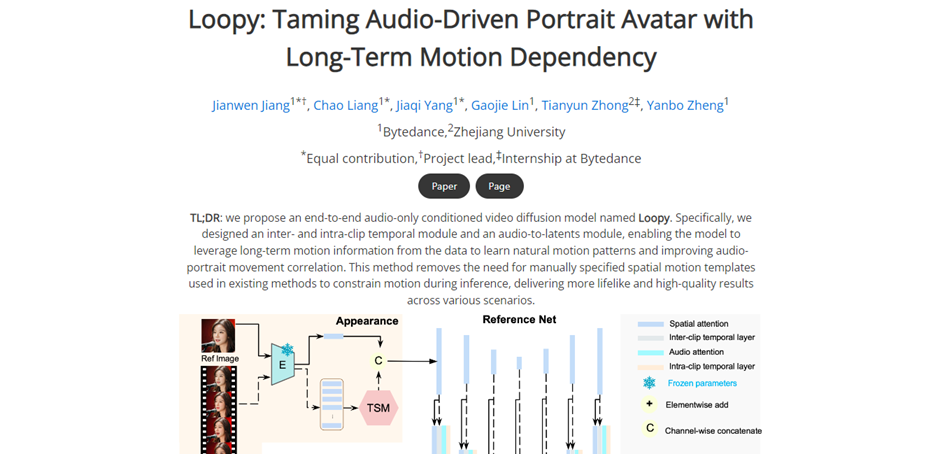

Loopy, by ByteDance, is an AI-powered platform for creating lifelike, audio-driven portrait avatars by leveraging long-term motion patterns.


Details

Updated: October 12, 2024

Loopy uses AI to generate realistic motion in portrait avatars, conditioned solely on audio input. Key features include:

- Audio-Driven Portrait Avatars: Generates lifelike portrait avatars whose movements respond naturally to the input audio.

- Long-Term Motion Dependency: Leverages long-term motion information to learn natural motion patterns and strengthen the correlation between audio and movement, producing more realistic animations.

- End-to-End Video Diffusion Model: Uses an audio-only conditioned video diffusion model, removing the need for the manually specified spatial motion templates that earlier methods use to constrain motion during inference.

- Inter- and Intra-Clip Temporal Module: Models temporal motion information both within a generated clip and across consecutive clips, improving the continuity and natural flow of the animation.

- Audio-to-Latents Module: Maps audio to latent representations that condition motion generation, supporting a wide range of speech and non-speech audio.

- Motion Diversity: Adapts avatar motion to the character of the audio, producing distinct results for inputs ranging from rapid speech to soothing tones.

- Lifelike Motion Patterns: Models natural long-term motion, including eyebrow and head movements and non-verbal cues such as sighing.

- Realistic Non-Speech Movements: Produces nuanced non-speech movements from the audio, such as emotion-driven expressions and subtle gestures.
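Loopy's internals are not spelled out in this listing, but the idea behind inter-clip temporal context can be illustrated generically. The sketch below assumes a clip-by-clip generation loop in which each new clip is conditioned on the audio for that clip plus the last few frames already generated (sometimes called motion frames). `generate_long_video`, `generate_clip`, `num_motion_frames`, and the list-of-floats frame representation are all hypothetical placeholders, not Loopy's actual API or architecture.

```python
from typing import Callable, List, Sequence

# Hypothetical stand-in for one frame's latent representation.
Frame = List[float]

# Hypothetical model interface: (audio for this clip, recent frames) -> new frames.
ClipGenerator = Callable[[Sequence[float], List[Frame]], List[Frame]]


def generate_long_video(
    audio_chunks: Sequence[Sequence[float]],
    generate_clip: ClipGenerator,
    num_motion_frames: int = 4,
) -> List[Frame]:
    """Generate a video clip by clip, conditioning each clip on the tail of
    the frames produced so far (a generic sketch, not Loopy's implementation)."""
    if num_motion_frames < 0:
        raise ValueError("num_motion_frames must be non-negative")
    video: List[Frame] = []
    for chunk in audio_chunks:
        # Motion context: up to num_motion_frames of the most recent frames.
        # Guard against 0 because video[-0:] would return the whole list.
        context = video[-num_motion_frames:] if num_motion_frames else []
        clip = generate_clip(chunk, context)
        video.extend(clip)
    return video


if __name__ == "__main__":
    # Toy generator (hypothetical): one frame per audio sample, each averaged
    # with the last context frame so that clips visibly depend on history.
    def toy_generator(chunk: Sequence[float], context: List[Frame]) -> List[Frame]:
        prev = context[-1][0] if context else 0.0
        return [[0.5 * prev + 0.5 * x] for x in chunk]

    frames = generate_long_video(
        [[1.0, 2.0], [3.0, 4.0]], toy_generator, num_motion_frames=2
    )
    print(len(frames), frames[-1])
```

The only design choice here is how much history to pass forward: a larger `num_motion_frames` gives each clip more motion context at the cost of more conditioning input. How Loopy itself selects and encodes longer-range motion history is outside the scope of this sketch.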

Loopy produces high-quality, lifelike avatar animations that respond dynamically to audio input without requiring complex spatial motion templates.